The rise of large language models (LLMs) has transformed natural language processing (NLP). However, their size and computational demands limit their accessibility and sustainability. This paper proposes Super Tiny Language Models (STLMs), a line of research that aims to deliver competitive performance with far fewer parameters and so ease the computational and energy costs of larger models. Here’s an in-depth exploration:

Introduction

The advent of large language models (LLMs) like GPT-3 has markedly advanced natural language processing (NLP) capabilities, driving significant improvements in machine translation, customer service automation, content generation, and more. However, these advancements come with high computational and energy demands, making them less accessible and sustainable for widespread use, especially for small to medium-sized enterprises (SMEs) and organizations operating in regions with limited resources.

STLMs aim to maintain comparable performance while cutting parameter counts by up to 95%. If that goal is met, the resulting models could deliver robust results without the prohibitive costs and energy consumption of larger models.
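To make the 95% figure concrete, the sketch below works through the arithmetic for a hypothetical 1-billion-parameter baseline. The baseline size is an assumption chosen for illustration and does not come from the paper. The sketch counts weight memory only, at 2 bytes per parameter as in fp16/bf16 storage, and ignores activations, optimizer state, and caches.

```python
# Hypothetical baseline size, chosen only for illustration (not from the paper).
BASELINE_PARAMS = 1_000_000_000


def reduced_params(baseline: int, reduction: float) -> int:
    """Parameters left after removing a fraction `reduction` (0..1) of the baseline."""
    return round(baseline * (1 - reduction))


def weight_memory_mb(params: int, bytes_per_param: int = 2) -> float:
    """Memory for the weights alone in MB (1 MB = 10**6 bytes).

    2 bytes per parameter corresponds to fp16/bf16 storage; activations,
    optimizer state, and caches are not included.
    """
    return params * bytes_per_param / 1e6


if __name__ == "__main__":
    for reduction in (0.0, 0.90, 0.95):
        p = reduced_params(BASELINE_PARAMS, reduction)
        print(f"{reduction:4.0%} fewer parameters: {p:,} params, "
              f"~{weight_memory_mb(p):,.0f} MB of weights")
```

Under these assumptions, a 95% reduction shrinks the baseline from 1,000,000,000 to 50,000,000 parameters. Its weights drop from about 2,000 MB to about 100 MB.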

Key Techniques

STLMs combine several techniques to improve efficiency. Weight tying shares parameters across different parts of the model. For example, the same matrix can serve as both the input embedding and the output projection, which reduces the parameter count while maintaining performance. Byte-level tokenization shrinks the vocabulary to the 256 possible byte values, which makes the embedding and output layers much smaller. Finally, efficient training strategies such as self-play and alternative training objectives aim to let the models learn effectively with fewer resources.
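Here is a minimal, dependency-free sketch of the first two techniques. It assumes the common form of weight tying, in which the input embedding and the output projection share one matrix. The class and function names are hypothetical. The sizes in the demo are illustrative and are not the paper's configuration: 50,257 is the size of GPT-2's BPE vocabulary, and 768 is the hidden size. Real models would use a tensor library; plain lists keep the example self-contained.

```python
import random


def byte_tokenize(text: str) -> list[int]:
    """Byte-level tokenization: each UTF-8 byte becomes one token ID in 0..255."""
    return list(text.encode("utf-8"))


def byte_detokenize(ids: list[int]) -> str:
    """Inverse of byte_tokenize (assumes the IDs form valid UTF-8)."""
    return bytes(ids).decode("utf-8")


class TiedEmbedding:
    """A single (vocab_size x d_model) matrix used on both ends of the model:
    to embed input tokens and to project hidden states back to vocabulary logits."""

    def __init__(self, vocab_size: int, d_model: int, seed: int = 0):
        rng = random.Random(seed)
        self.weight = [[rng.gauss(0.0, 0.02) for _ in range(d_model)]
                       for _ in range(vocab_size)]

    def embed(self, token_id: int) -> list[float]:
        # Input side: look up the token's row.
        return self.weight[token_id]

    def logits(self, hidden: list[float]) -> list[float]:
        # Output side: dot the hidden state with every row (hidden @ weight.T),
        # reusing the same parameters instead of a separate output matrix.
        return [sum(h * w for h, w in zip(hidden, row)) for row in self.weight]


def embedding_params(vocab_size: int, d_model: int, tied: bool) -> int:
    """Parameters in the input embedding plus the output projection (no bias)."""
    per_matrix = vocab_size * d_model
    return per_matrix if tied else 2 * per_matrix


if __name__ == "__main__":
    ids = byte_tokenize("héllo")
    print(ids, byte_detokenize(ids))

    layer = TiedEmbedding(vocab_size=256, d_model=16)
    scores = layer.logits(layer.embed(ids[0]))
    print(len(scores), "logits over the byte vocabulary")

    # Illustrative sizes: a 50,257-token BPE vocabulary vs. 256 bytes, d_model = 768.
    for vocab in (50_257, 256):
        for tied in (False, True):
            print(f"vocab={vocab:>6}, tied={tied!s:>5}: "
                  f"{embedding_params(vocab, 768, tied):>10,} params")
```

With these illustrative sizes, tying cuts the embedding and output layers from 77,194,752 to 38,597,376 parameters. Switching to a 256-byte vocabulary then brings the tied matrix down to 196,608. The trade-off is longer sequences, since a single character can take several bytes (`"é"` becomes `[195, 169]`).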

Benefits

The reduced parameter counts of STLMs translate into numerous advantages. Lower computational and energy requirements make them environmentally friendly and cost-effective, opening up NLP capabilities to a wider audience. This increased accessibility allows researchers and industry practitioners to explore NLP applications with less overhead. Furthermore, the reduced complexity of STLMs facilitates faster experimentation and development cycles, accelerating innovation and deployment.

Challenges

Despite their significant potential, STLMs face certain challenges. Ensuring that smaller models can compete with larger ones in terms of accuracy remains a crucial hurdle. High-quality training data is also essential for STLMs to perform well despite their reduced size, necessitating careful data selection and knowledge distillation techniques.
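Knowledge distillation trains the small model (the student) to match the output distribution of a larger model (the teacher). The sketch below shows the widely used soft-target formulation. It combines a KL-divergence term on temperature-softened distributions with the ordinary cross-entropy loss on the true label. The paper summarized here does not specify this exact loss. The function names and the default `temperature` and `alpha` values are assumptions for illustration.

```python
import math


def log_softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled log-softmax; subtracting the max keeps exp() stable."""
    scaled = [x / temperature for x in logits]
    top = max(scaled)
    log_total = top + math.log(sum(math.exp(x - top) for x in scaled))
    return [x - log_total for x in scaled]


def distillation_loss(student_logits: list[float], teacher_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) between temperature-softened distributions,
    scaled by temperature**2 so gradient magnitudes stay comparable across temperatures."""
    log_p_teacher = log_softmax(teacher_logits, temperature)
    log_p_student = log_softmax(student_logits, temperature)
    kl = sum(math.exp(lt) * (lt - ls) for lt, ls in zip(log_p_teacher, log_p_student))
    return temperature ** 2 * kl


def cross_entropy(logits: list[float], target: int) -> float:
    """Ordinary next-token loss against the true label."""
    return -log_softmax(logits)[target]


def student_loss(student_logits: list[float], teacher_logits: list[float],
                 target: int, alpha: float = 0.5, temperature: float = 2.0) -> float:
    """Blend of hard-label loss and distillation loss; alpha is a tunable weight."""
    return (alpha * cross_entropy(student_logits, target)
            + (1 - alpha) * distillation_loss(student_logits, teacher_logits, temperature))
```

Working in log space avoids taking `log(0)` when a probability underflows. A higher temperature exposes more of the teacher's preferences among wrong answers, which is the extra signal a small student can learn from.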

Case Studies

Existing small models such as TinyLlama, Phi-3-mini, and MobiLlama show that compact language models can achieve competitive performance with far fewer parameters than the largest LLMs. These results suggest that STLMs could be viable for a range of real-world NLP tasks, including:

- Machine translation: STLMs can translate text between languages with high accuracy and efficiency, making communication and information sharing more accessible.

- Text summarization: STLMs can condense large amounts of text into concise summaries, saving time and improving information comprehension.

- Question answering: STLMs can answer questions based on factual knowledge, providing users with quick and accurate information.

- Chatbots: STLMs can power chatbots to engage in natural and informative conversations with users, improving customer service and information delivery.

Technical Implementation

The success of STLMs hinges on several key technical aspects:

- High-quality training data: Data selection and knowledge distillation techniques play crucial roles in ensuring model efficacy, even with smaller parameter sizes.

- State-of-the-art transformer architecture: STLMs incorporate advanced transformer techniques to maximize performance while minimizing resource usage.

- Rigorous evaluation methods: Standard NLP benchmarks are used to ensure that STLMs meet stringent quality and efficacy standards.
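Many standard multiple-choice benchmarks are scored by asking the model for the log-probability of each candidate answer and picking the highest. The sketch below assumes those log-probabilities have already been computed by some model; the scoring call itself is left out. It also shows optional length normalization, which evaluation harnesses commonly offer. The item fields and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class MultipleChoiceItem:
    choice_logprobs: list[float]  # model's summed log-probability for each answer
    choice_lengths: list[int]     # length of each answer (e.g. in bytes or tokens)
    correct: int                  # index of the gold answer


def predict(item: MultipleChoiceItem, length_normalize: bool = False) -> int:
    """Index of the highest-scoring choice (the first one wins on ties)."""
    scores = [lp / n if length_normalize else lp
              for lp, n in zip(item.choice_logprobs, item.choice_lengths)]
    return max(range(len(scores)), key=scores.__getitem__)


def accuracy(items: list[MultipleChoiceItem], length_normalize: bool = False) -> float:
    """Fraction of items where the predicted choice matches the gold answer."""
    hits = sum(predict(item, length_normalize) == item.correct for item in items)
    return hits / len(items)


if __name__ == "__main__":
    # Made-up scores purely to exercise the code; these are not benchmark results.
    items = [
        MultipleChoiceItem([-5.0, -2.0, -9.0], [5, 4, 10], correct=1),
        MultipleChoiceItem([-12.0, -10.0], [12, 5], correct=0),
    ]
    print("raw accuracy:", accuracy(items))
    print("length-normalized accuracy:", accuracy(items, length_normalize=True))
```

Length normalization matters especially for byte-level models. A longer answer spans more tokens and accumulates more negative log-probability even when it is correct. With the invented inputs above, the second item flips from wrong to right once scores are divided by answer length.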

Conclusion

The paper underscores STLMs’ potential to deliver high-performance language models that are far cheaper to train and run. By making such models more accessible, efficient, and sustainable, STLMs offer a promising path toward democratizing NLP. As research in this area continues, they could reshape the NLP landscape, enabling a broader range of applications and fostering innovation across diverse domains.

Importance for Businesses

This research matters for businesses that want to cut operational costs and improve efficiency through AI. STLMs offer a way to pursue these goals without the considerable costs of larger models. Here’s why STLM adoption matters for businesses:

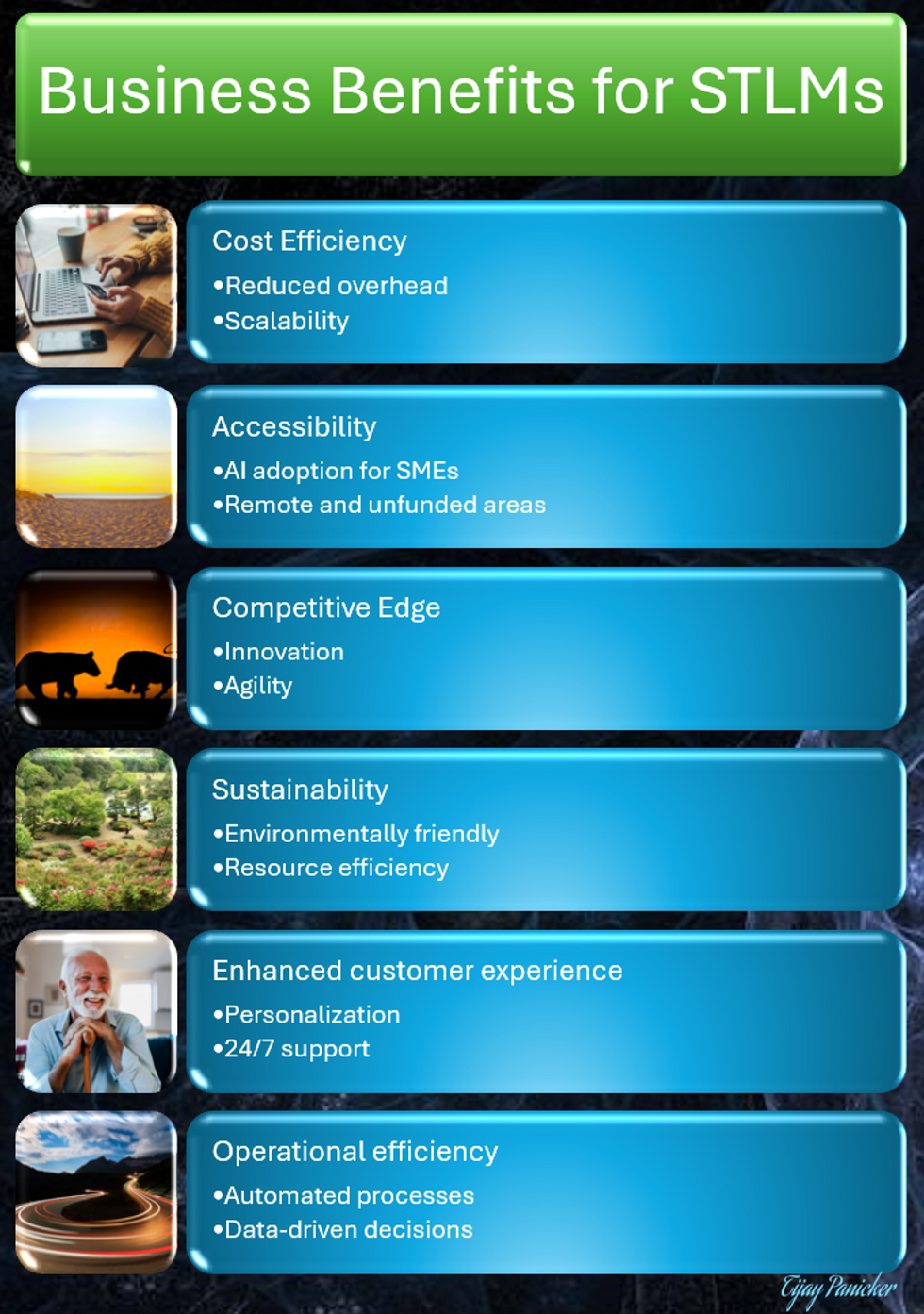

Benefits for Businesses

Cost Efficiency:

- Reduced Overhead: Diminished computational requirements translate to lower server, infrastructure, and energy expenses.

- Scalability: Enables AI capabilities to scale affordably without large budget increases.

Accessibility:

- AI Adoption for SMEs: Facilitates the integration of advanced AI by small and medium-sized enterprises, overcoming previous cost barriers.

- Remote and Underfunded Areas: Businesses in resource-limited or decentralized regions can leverage STLMs for enhanced operations.

Competitive Edge:

- Innovation: Harnessing advanced AI technologies without substantial investments allows businesses to stay at the technological forefront.

- Agility: Swift experimentation cycles enable rapid testing and implementation of AI-driven strategies, facilitating quick adaptation to market dynamics.

Sustainability:

- Environmentally Friendly: Lower energy consumption supports sustainability goals and corporate social responsibility (CSR) initiatives.

- Resource Efficiency: Efficient computational resource utilization promotes more sustainable business models.

Enhanced Customer Experiences:

- Personalization: STLMs make it affordable to generate responses tailored to each customer. Such personalized experiences can boost customer satisfaction and retention.

- 24/7 Support: Cost-effective AI solutions enable consistent customer service operations, improving reliability and customer trust.

Operational Efficiency:

- Automated Processes: Streamlining and automating routine tasks minimizes errors and liberates human resources for strategic roles.

- Data-Driven Decisions: Access to sophisticated, cost-effective data analytics empowers informed decision-making.

Business Use Cases

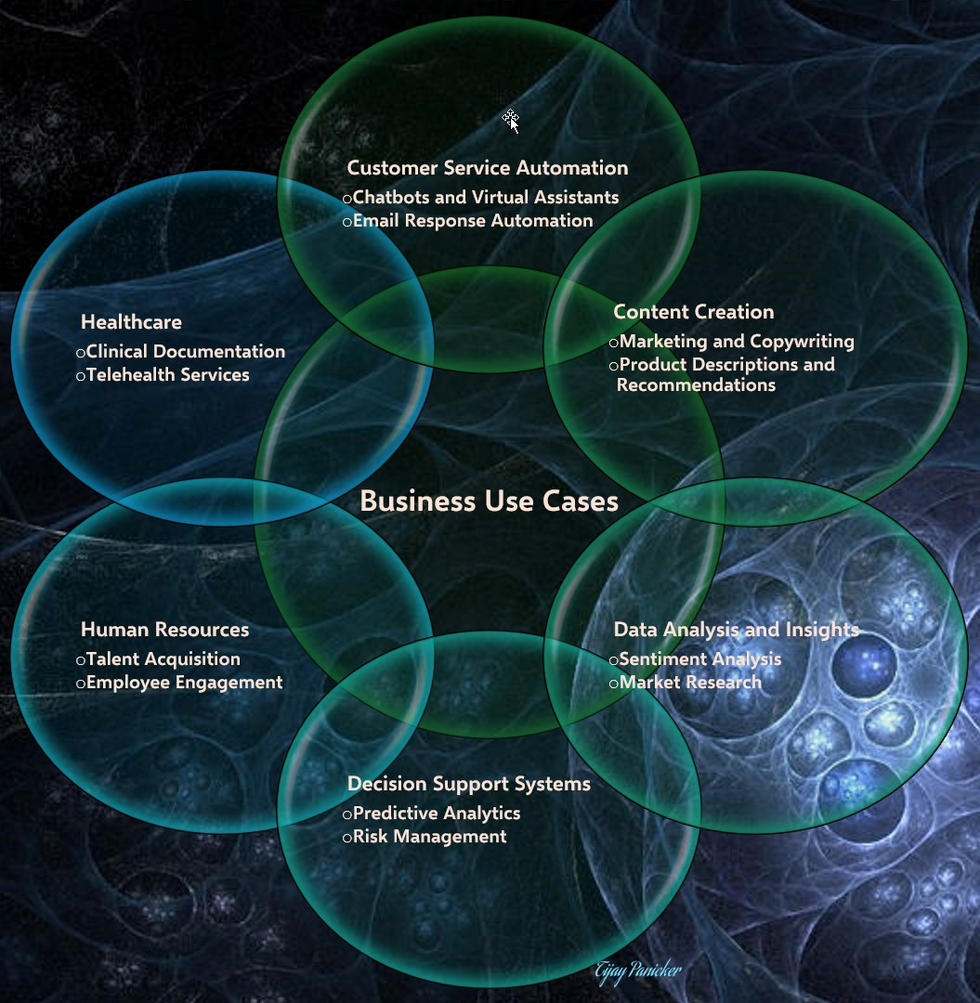

Customer Service Automation:

- Chatbots and Virtual Assistants: Utilizing STLMs to power chatbots can provide responsive and accurate customer support around the clock, reducing the burden on human support teams and improving customer satisfaction.

- Email Response Automation: Automating email responses using STLMs can handle common customer inquiries efficiently, ensuring timely communication.

Content Creation:

- Marketing and Copywriting: Businesses can leverage STLMs to generate high-quality marketing copy, social media posts, and content for websites, saving time and costs associated with content creation.

- Product Descriptions and Recommendations: E-commerce platforms can use STLMs to generate compelling product descriptions and personalized recommendations, enhancing user experience and driving sales.

Data Analysis and Insights:

- Sentiment Analysis: STLMs can be employed to analyze customer feedback and reviews, providing valuable insights into customer sentiment and areas for improvement.

- Market Research: Businesses can use STLMs to process and analyze large volumes of market data, helping to identify trends and make informed strategic decisions.

Decision Support Systems:

- Predictive Analytics: Integrating STLMs into decision support systems can enhance predictive analytics capabilities, aiding in demand forecasting, inventory management, and financial planning.

- Risk Management: Businesses can use STLMs to analyze risk factors and develop mitigation strategies, enhancing overall risk management frameworks.

Human Resources:

- Talent Acquisition: STLMs can streamline recruitment by automating initial candidate screening, parsing resumes, and suggesting the best candidates based on job requirements.

- Employee Engagement: Analyzing employee feedback and sentiment using STLMs can help HR departments develop strategies to improve workplace engagement and productivity.

Healthcare:

- Clinical Documentation: STLMs can assist healthcare providers by automating the creation of clinical documentation, reducing administrative burden and allowing more time for patient care.

- Telehealth Services: Enhancing telehealth services with STLM-powered assistants can improve patient interactions, provide timely health advice, and facilitate follow-up communications.

By adopting STLMs, businesses can reach new levels of efficiency, innovation, and competitiveness, positioning themselves as leaders in their respective markets. These models promise to democratize access to advanced AI, paving the way for a more diverse and inclusive technological landscape.

Attribution:

❖ Leon Guertler, Dylan Hillier, Palaash Agrawal, Chen Ruirui, Bobby Cheng, Cheston Tan

❖ Centre for Frontier AI Research (CFAR), Institute of High-Performance Computing (IHPC), A*STAR

❖ Published on arXiv, 2024
